Views: 19 Author: Site Editor Publish Time: 2025-09-10 Origin: Site

Yes, a server CPU/GPU waterblock can effectively prevent AI cluster overheating issues by providing superior, direct-to-chip thermal management that dissipates intense heat (e.g., 385W per component) far more efficiently than air cooling. This prevents thermal throttling, ensures sustained peak performance for AI workloads, and maintains system stability, which is critical for the continuous operation of high-density AI clusters.

The rapid rise of Artificial Intelligence (AI) has driven server CPUs—and especially GPUs—to their absolute limits. Within dense AI clusters, these processors generate extraordinary levels of heat that often cause thermal throttling and system instability. Traditional air cooling can no longer keep pace. To overcome these challenges, server CPU/GPU waterblocks provide a direct and powerful solution, ensuring consistent performance and reliability even under extreme workloads.

How Do Server CPU/GPU Waterblocks Combat Intense AI Heat Loads?

What Specific Performance Benefits Do Waterblocks Offer AI Clusters?

How Do Waterblocks Enhance Reliability and Longevity in AI Environments?

What Considerations Are Important When Implementing Waterblocks for AI Clusters?

AI clusters aren't just collections of powerful servers; they're highly specialized, densely packed computational powerhouses designed for continuous, intensive workloads. This unique operational profile creates thermal challenges that far exceed those of general-purpose data centers.

AI clusters face unique overheating challenges due to the sustained, near-100% utilization of high-TDP GPUs and CPUs, which generate concentrated heat loads (e.g., 700W+ per GPU). Their high-density configurations limit airflow, making traditional air cooling insufficient to prevent thermal throttling and maintain stable operating temperatures, leading to performance degradation and system instability.

The core of an AI cluster's overheating problem stems from the nature of AI workloads themselves. Unlike typical server tasks that might have fluctuating loads, AI training, inference, and large language model (LLM) processing often demand near-100% utilization of both CPUs and GPUs for extended periods, sometimes days or weeks on end. This sustained, maximum load means that these components are constantly generating their peak Thermal Design Power (TDP).

Consider a modern AI GPU like an NVIDIA H100 or A100, which can have a TDP ranging from 350W to over 700W. A single server might house 4 to 8 such GPUs, alongside high-performance CPUs, collectively generating thousands of watts of heat within a very confined space. For example, an AI cluster running a complex deep learning model will push every GPU to its thermal limits, generating a constant, intense heat output. Traditional air coolers, even large ones, struggle to dissipate this kind of sustained, concentrated heat effectively, leading to a rapid rise in component temperatures. This is precisely where a server CPU/GPU waterblock, designed to handle such extreme heat loads (e.g., a 385W TDP waterblock), becomes indispensable.

Component Type | Typical TDP Range (Air-Cooled) | Typical TDP Range (Liquid-Cooled) | Overheating Risk (Air) |

Server CPU | 150W - 300W | 200W - 400W+ | Moderate to High |

AI GPU | 300W - 700W+ | 400W - 1000W+ | Very High |

AI clusters are designed for maximum computational density. This means packing as many powerful GPUs and CPUs as possible into each server chassis and then cramming as many of these servers as possible into each rack. While this maximizes compute power per square foot, it severely restricts airflow, exacerbating the overheating problem.

In a densely packed GPU server rack, the space between components and servers is minimal. Air, even when moved by powerful fans, struggles to penetrate all the nooks and crannies, creating "hot spots" where heat accumulates. The hot exhaust air from one server can become the intake air for the next, leading to a cascading thermal problem. For instance, a 4U server with 8 high-TDP GPUs might have its internal fans working overtime, but the sheer volume of heat generated overwhelms the air's capacity to carry it away. This often results in the server's internal temperature rising, forcing the GPUs to throttle down.

A server CPU/GPU waterblock directly addresses this by removing heat at the source, before it can even enter the server's internal air stream. This significantly reduces the ambient temperature within the server chassis and the rack, making the overall thermal environment much more manageable. This is why liquid cooling for AI is not just an option, but a necessity for high-density AI data centers to prevent widespread overheating issues.

When faced with the extreme heat generated by an AI cluster, traditional air cooling often falters. Server CPU/GPU waterblocks offer a fundamentally different and far more effective approach, leveraging the superior heat transfer properties of liquid to directly combat intense heat loads.

Server CPU/GPU waterblocks combat intense AI heat loads by utilizing highly conductive copper and an optimized internal micro-channel design to directly absorb heat (e.g., 385W) from the processor and transfer it to a liquid coolant. This direct, efficient heat exchange (with R-ca as low as 0.028°C/W) rapidly removes heat from the component, preventing thermal runaway and maintaining stable, low operating temperatures crucial for sustained AI performance.

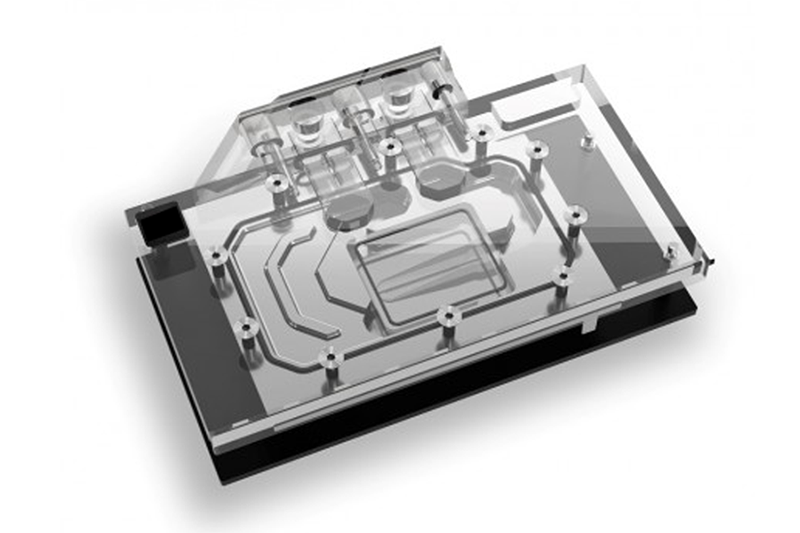

The core principle behind how server CPU/GPU waterblocks combat intense AI heat loads is direct-to-chip heat removal. Instead of relying on air to passively absorb heat from a finned heatsink, a waterblock places a highly thermally conductive material (typically copper) directly onto the processor's Integrated Heat Spreader (IHS). This copper base then has internal channels through which a liquid coolant flows.

Liquid, particularly water-based coolants, has a significantly higher specific heat capacity and thermal conductivity compared to air. This means liquid can absorb and transport far more heat energy per unit volume than air. For example, water's specific heat capacity is roughly 4 times that of air, and its thermal conductivity is about 25 times greater. This allows a custom copper liquid cold plate to rapidly pull heat away from a high-TDP AI GPU (e.g., 700W+) or CPU (e.g., 300W+). The heat is then carried away by the flowing liquid to a radiator, often located outside the server or rack, where it can be dissipated more effectively.

This direct and efficient heat transfer is crucial for AI clusters because it prevents heat from building up at the source. For instance, a GPU waterblock designed for a 385W TDP component with an R-ca of 0.028°C/W can maintain the GPU's temperature significantly lower than an air cooler, even under continuous, heavy AI workloads. This direct removal means the heat never enters the server's internal air stream, reducing the overall thermal load on the server and the data center's HVAC system.

Property | Air (at STP) | Water (at 25°C) | Benefit for Waterblocks |

Specific Heat (J/kg·K) | ~1000 | ~4180 | Can absorb ~4x more heat per unit mass. |

Thermal Conductivity (W/m·K) | ~0.026 | ~0.6 | Can conduct ~25x more heat. |

Density (kg/m³) | ~1.2 | ~997 | Can transport more heat in a smaller volume. |

The effectiveness of server CPU/GPU waterblocks in combating intense AI heat loads is also a testament to precision engineering and advanced manufacturing. It's not enough to simply have copper and liquid; the design must optimize every aspect of heat transfer.

Ultra-Flat Mating Surface: The base of a CPU waterblock or GPU waterblock must be incredibly flat and smooth to ensure maximum contact with the processor's IHS. Any microscopic gaps filled with air act as insulators, severely degrading performance. High-precision CNC machining, like that employed by KINGKA, achieves the micron-level flatness required for optimal thermal interface material (TIM) application and heat transfer.

Optimized Internal Micro-Channels: The internal fluid channels are meticulously designed to maximize the surface area exposed to the coolant while minimizing flow restriction (pressure drop). These micro-channels create turbulence, which enhances heat transfer from the copper to the liquid. For example, a waterblock might be designed to achieve its optimal 385W dissipation at a specific flow rate of 1.0 LPM and a pressure drop of 3.60 psi, indicating a carefully balanced design.

Robust Sealing: Given that liquid and electronics don't mix, the sealing of the waterblock is paramount. Advanced techniques like vacuum brazing (for copper) or Friction Stir Welding (FSW for aluminum) ensure leak-proof integrity, which is non-negotiable for the reliability of an AI cluster.

This level of precision ensures that the server CPU/GPU waterblock can consistently and reliably remove the massive heat generated by AI workloads, preventing overheating and allowing the AI cluster to operate at its full potential without compromise.

For AI clusters, performance is paramount. Overheating doesn't just cause instability; it directly impacts the speed and efficiency of AI model training and inference. Server CPU/GPU waterblocks offer specific, measurable performance benefits that are critical for maximizing the output of these demanding computational systems.

Server CPU/GPU waterblocks offer specific performance benefits to AI clusters by preventing thermal throttling, enabling sustained peak clock speeds for GPUs and CPUs, and ensuring consistent, predictable performance during intensive AI workloads. This directly translates to faster model training times, higher inference throughput, and maximized computational output, making them essential for high-performance AI data centers.

One of the most significant performance benefits of server CPU/GPU waterblocks for AI clusters is the elimination of thermal throttling. When a CPU or GPU reaches a predefined temperature limit, it automatically reduces its clock speed and power consumption to prevent damage. While this protects the hardware, it severely degrades performance, effectively wasting computational cycles.

In an AI cluster running a deep learning training job, thermal throttling can add hours or even days to the completion time. A GPU server with air-cooled GPUs might start strong, but as temperatures rise under sustained load, the GPUs will throttle, slowing down the entire training process. A server CPU/GPU waterblock, by contrast, maintains consistently low operating temperatures, allowing the processors to run at their maximum boost clocks for the entire duration of the workload.

For example, an NVIDIA H100 GPU might have a base clock of 1.7 GHz and a boost clock of 2.0 GHz. Under air cooling, it might only sustain 1.8 GHz due to thermal limits. With a GPU waterblock, it can consistently maintain 2.0 GHz or higher, leading to a significant increase in floating-point operations per second (FLOPS). This direct performance boost means AI models train faster, iterations are quicker, and researchers can achieve results more rapidly, directly impacting the efficiency and output of the AI data center.

Cooling Method | GPU Clock Speed (Sustained Load) | AI Training Time | Inference Throughput |

Air Cooling | Reduced (due to throttling) | Longer | Lower |

Liquid Cooling | Max Boost Clock (consistent) | Shorter | Higher |

Beyond just raw speed, server CPU/GPU waterblocks provide the crucial benefit of consistent and predictable performance for AI workloads. In an AI cluster, where multiple GPUs and CPUs work in parallel, inconsistent performance from even a few components can disrupt the entire cluster's synchronization and efficiency.

Thermal throttling can be unpredictable, varying based on ambient temperatures, dust buildup, or slight differences in individual component thermals. This variability makes it difficult to accurately estimate workload completion times or ensure that all components are contributing equally to the computation. With CPU waterblocks and GPU waterblocks, the operating temperatures are much more stable and uniform across all components. This consistency ensures that:

Workloads Complete on Time: Predictable performance allows for more accurate scheduling and resource allocation within the AI cluster.

Optimal Resource Utilization: All GPUs and CPUs contribute at their maximum potential, preventing bottlenecks caused by underperforming, throttled components.

Simplified Management: IT staff spend less time troubleshooting thermal issues and more time managing the actual AI workloads.

For instance, in a distributed AI training scenario, if one GPU server experiences thermal throttling, it can slow down the entire distributed training process. A custom copper liquid cold plate ensures that each GPU in the cluster performs optimally, leading to a more efficient and reliable overall training environment. This consistency is invaluable for large-scale AI operations where every second of compute time is critical.

The intense, continuous operation of AI clusters places immense stress on hardware, making reliability and longevity paramount. Overheating is a primary culprit for component degradation and failure. Server CPU/GPU waterblocks are not just about performance; they are a critical investment in the long-term health and stability of an AI infrastructure.

Server CPU/GPU waterblocks enhance reliability and longevity in AI environments by maintaining consistently lower and more stable operating temperatures, significantly reducing thermal stress and degradation on high-value CPUs and GPUs. This prevents premature component failure, extends hardware lifespan, and minimizes costly downtime, ensuring the continuous, reliable operation essential for mission-critical AI workloads.

High temperatures are the enemy of electronic components. Sustained exposure to elevated temperatures accelerates various degradation mechanisms, such as electromigration, material fatigue, and chemical reactions within the semiconductor. For expensive, high-performance components like AI GPUs (e.g., NVIDIA H100) and server CPUs (e.g., Intel Xeon), this can lead to premature failure, costing data centers significant capital and operational expenses.

Server CPU/GPU waterblocks directly mitigate this risk by keeping components significantly cooler than air cooling. By maintaining operating temperatures well below critical thresholds, they drastically reduce thermal stress. For example, a custom copper liquid cold plate designed for a 385W TDP component can keep its temperature rise to just 10.78°C above the coolant temperature (with R-ca = 0.028°C/W). This stable, lower temperature environment:

Extends Lifespan: Components last longer, delaying the need for costly replacements.

Reduces Failure Rates: Fewer components fail unexpectedly, improving overall system uptime.

Maintains Performance Over Time: Components retain their performance characteristics for a longer duration, avoiding gradual degradation.

In an AI data center, where hardware investments are substantial, extending the lifespan of each GPU server and its components by even a year or two can result in millions of dollars in savings. This makes liquid cooling for AI a strategic investment in the long-term health and financial viability of the cluster.

Temperature Range | Impact on Component Lifespan | Impact on Reliability |

High (>85°C) | Significantly Reduced | Low (High Failure Rate) |

Moderate (65-85°C) | Reduced | Moderate |

Low (<65°C) | Extended | High (Low Failure Rate) |

Beyond individual component lifespan, server CPU/GPU waterblocks contribute to the overall stability and uptime of the entire AI cluster. Overheating issues can lead to:

System Crashes/Reboots: When components reach critical temperatures, the system may automatically shut down or reboot to prevent damage, interrupting ongoing AI workloads.

Unpredictable Errors: Thermal stress can cause intermittent errors or instability that are difficult to diagnose, leading to wasted compute time and debugging efforts.

Maintenance Overheads: Frequent thermal-related issues require more technician time for troubleshooting, repairs, and component replacements.

By preventing these overheating scenarios, server CPU/GPU waterblocks ensure that the AI cluster operates with greater stability and reliability. This means AI training jobs run to completion without interruption, inference services remain continuously available, and critical research is not delayed. For a mission-critical AI data center, minimizing downtime is paramount, as every hour of lost compute time can translate into significant financial losses or missed opportunities.

The robust construction and leak-proof sealing of high-quality custom cold plates, often achieved through advanced manufacturing processes like vacuum brazing or FSW, are also crucial for long-term reliability. KINGKA's rigorous quality control, including multiple leak tests and dimensional inspections, ensures that every server CPU/GPU waterblock delivered is built for unwavering stability in the most demanding AI environments.

Implementing server CPU/GPU waterblocks in an AI cluster is a strategic decision that requires careful planning and consideration beyond just the waterblocks themselves. A holistic approach to the entire liquid cooling system is essential to maximize benefits and ensure successful deployment.

Implementing server CPU/GPU waterblocks for AI clusters requires careful consideration of the entire liquid cooling loop, including pump capacity, radiator sizing, coolant type, and leak detection systems. Customization for specific server architectures and AI workloads is crucial, alongside robust maintenance protocols and expert design support to ensure optimal thermal performance, reliability, and seamless integration within the AI data center infrastructure.

A server CPU/GPU waterblock is just one part of a larger liquid cooling ecosystem. Its effectiveness is entirely dependent on the proper design and implementation of the complete liquid cooling loop. Key considerations include:

Pump Capacity and Redundancy: The pump must be powerful enough to circulate the coolant at the required flow rate (e.g., 1.0 LPM per waterblock) against the system's total pressure drop. For mission-critical AI clusters, pump redundancy is essential to prevent single points of failure.

Radiator/CDU Sizing: The heat exchanger (radiator or Cooling Distribution Unit - CDU) must be adequately sized to dissipate the cumulative heat load from all the CPU waterblocks and GPU waterblocks in the cluster. For example, if a rack has 8 GPUs each dissipating 700W, the CDU must handle 5600W of heat.

Coolant Type and Quality: Choosing the right coolant (e.g., deionized water with anti-corrosion additives, glycol mixtures, or dielectric fluids) is crucial for system longevity and performance. Regular monitoring of coolant quality is also important.

Plumbing and Manifolds: The design of the tubing, quick-disconnects, and manifolds within the server and rack must minimize flow restriction and ensure even coolant distribution to all waterblocks.

Leak Detection and Safety: Implementing robust leak detection systems and automatic shut-off mechanisms is paramount to protect valuable hardware in an AI data center.

Working with an experienced thermal solutions provider like KINGKA, which offers one-stop service from design to manufacturing, is crucial for designing a balanced and reliable liquid cooling loop. Our expertise in custom cold plates extends to understanding how they integrate into the broader system.

System Component | Key Consideration | Impact on AI Cluster |

Pump | Flow rate, head pressure, redundancy. | Ensures adequate coolant circulation, prevents throttling. |

Radiator/CDU | Heat dissipation capacity, fan configuration. | Removes heat from the liquid, prevents system-wide temperature rise. |

Coolant | Type, anti-corrosion properties, electrical conductivity. | Protects components, maintains thermal performance, prevents shorts. |

Plumbing | Material, diameter, quick-disconnects, pressure drop. | Ensures efficient flow, simplifies maintenance, prevents leaks. |

Leak Detection | Sensors, automatic shut-off, alarm systems. | Protects hardware from damage, minimizes downtime in case of a leak. |

For optimal performance and reliability in an AI cluster, customization is often key. While standard server CPU/GPU waterblocks might fit some applications, highly specialized AI servers often benefit from custom cold plates tailored to their unique dimensions, power delivery, and thermal profiles.

Custom Design: A custom copper liquid cold plate can be designed to perfectly fit a specific GPU module or a multi-CPU motherboard, optimizing thermal contact and port placement for seamless integration. KINGKA's free technical design support, including thermal analysis and airflow simulations, helps create these bespoke solutions.

Regular Maintenance: Like any advanced system, liquid cooling requires regular maintenance. This includes checking coolant levels, inspecting for leaks, cleaning radiators, and potentially replacing coolant periodically. Establishing clear maintenance protocols is vital for long-term reliability.

Expert Support: Partnering with a manufacturer that has deep expertise in both thermal management and precision manufacturing (like KINGKA, with 15+ years of experience) provides invaluable support. This includes assistance with design, troubleshooting, and ensuring that the server CPU/GPU waterblocks meet the highest quality standards through rigorous checks (e.g., 4+ inspections, CMM, leak testing).

By considering these factors, AI data centers can successfully implement server CPU/GPU waterblocks to not only prevent overheating issues but also to unlock the full potential of their computational infrastructure, ensuring sustained performance, reliability, and a competitive edge in the rapidly evolving field of AI.

The intense computational demands of modern AI clusters present formidable thermal challenges that traditional air cooling can no longer adequately address. Server CPU/GPU waterblocks emerge as a critical and highly effective solution, directly preventing overheating issues that would otherwise cripple performance and reliability. By leveraging the superior heat transfer capabilities of liquid, these precision-engineered components ensure that high-TDP CPUs and GPUs can operate at their sustained peak performance, free from the constraints of thermal throttling.

The benefits for AI data centers are profound: faster AI model training, higher inference throughput, extended hardware lifespan, and significantly enhanced system stability. This translates directly into reduced operational costs, greater return on hardware investment, and a more resilient, future-proof infrastructure. While implementation requires careful consideration of the entire liquid cooling loop and often benefits from customization, the long-term gains in performance, reliability, and sustainability make server CPU/GPU waterblocks an indispensable technology for any serious AI cluster.

At KINGKA, we understand the unique and demanding thermal requirements of AI. With our 15+ years of experience as a one-stop thermal solutions provider, specializing in custom copper liquid cold plates and precision CNC parts, we are uniquely positioned to help AI data centers overcome their overheating challenges. Our expert team is ready to partner with you, from initial design and thermal analysis to manufacturing and delivery, ensuring your AI cluster runs cooler, faster, and more reliably.