Author: Site Editor | Publish Time: 2025-09-10 | Origin: Site

Server CPU/GPU waterblocks boost data center efficiency by enabling superior thermal management: they remove heat directly at the source (385W per component for the example cold plate discussed in this article), which prevents throttling, allows higher component density, and reduces overall energy consumption. This leads to sustained peak performance, lower Power Usage Effectiveness (PUE), and extended hardware lifespan, making them critical for modern, high-density data centers.

In today's data-driven world, data centers are the beating heart of digital infrastructure, constantly striving for more power, more speed, and more efficiency. As processors (both CPUs and GPUs) become increasingly powerful, they also generate immense heat, posing a significant challenge to traditional air-cooling methods. This is where advanced liquid cooling solutions, specifically server CPU/GPU waterblocks, step in as game-changers. These precision-engineered components are not just about keeping things cool; they're about fundamentally transforming how data centers operate, unlocking new levels of performance and dramatically boosting overall efficiency.

How Do Server CPU/GPU Waterblocks Enhance Thermal Performance?

What Specific Efficiency Gains Do Waterblocks Offer Data Centers?

How Do Waterblocks Enable Higher Density and Scalability in Data Centers?

What Role Do Custom Waterblocks Play in Optimizing Data Center Cooling?

What Are the Long-Term Benefits of Deploying Waterblocks in Data Centers?

How Do Server CPU/GPU Waterblocks Enhance Thermal Performance?

Ever wondered how a small metal block can tame the heat of a high-performance server CPU or GPU far better than any fan? A waterblock absorbs heat directly from the component's surface and transfers it to a circulating liquid. Because liquid carries heat away far more efficiently than air, heat dissipation stays rapid and consistent, even for components generating hundreds of watts.

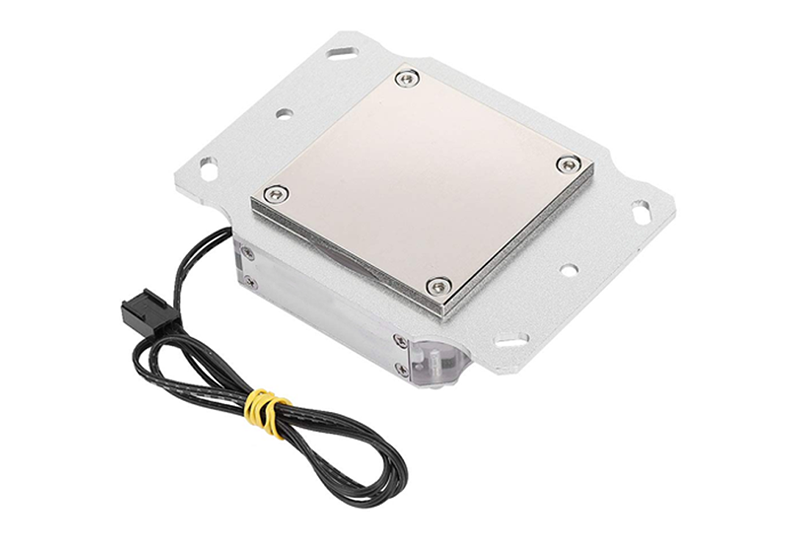

Server CPU/GPU waterblocks enhance thermal performance by leveraging copper's superior thermal conductivity (up to 400 W/m·K) and an optimized internal micro-channel design to directly absorb and transfer intense heat (e.g., 385W) from the processor to a liquid coolant. This direct contact and efficient heat exchange result in significantly lower operating temperatures and thermal resistance (e.g., 0.028°C/W) compared to air cooling, preventing throttling and ensuring peak performance.

The fundamental advantage of server CPU/GPU waterblocks lies in their ability to facilitate direct heat transfer from the component's Integrated Heat Spreader (IHS) or die to a liquid coolant. Unlike air cooling, which relies on fans to move air over a finned heatsink, liquid cooling places a highly conductive metal block (typically copper) directly onto the heat source. Copper, with a thermal conductivity of approximately 400 W/m·K, conducts heat roughly 15,000 times better than still air (around 0.026 W/m·K) and about 1.7 times better than aluminum (around 237 W/m·K).

This means that heat generated by a powerful server CPU (e.g., an Intel Xeon Scalable processor) or a high-performance GPU (e.g., an NVIDIA H100 or A100) is rapidly absorbed by the copper base of the waterblock. For instance, a custom copper liquid cold plate designed for a 385W TDP processor can achieve a thermal resistance (R-ca) as low as 0.028°C/W. This figure means the component's case runs only 0.028°C above the coolant for every watt of heat, or roughly 10.8°C at the full 385W load. This rapid and efficient heat removal is crucial for preventing the component from reaching its thermal limits, which would otherwise trigger thermal throttling and reduce performance.
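The thermal-resistance figure quoted above can be turned into a temperature rise with a single multiplication. The following is a minimal sketch, assuming the usual steady-state relation ΔT = P × R-ca; the function names and the 40°C coolant temperature are illustrative assumptions, not values from the cold plate's datasheet.

```python
def case_to_coolant_delta_t(power_w: float, r_ca_c_per_w: float) -> float:
    """Steady-state rise of the case above the coolant, in degrees C."""
    return power_w * r_ca_c_per_w


def case_temperature(coolant_temp_c: float, power_w: float, r_ca_c_per_w: float) -> float:
    """Estimated case temperature for a given coolant temperature."""
    return coolant_temp_c + case_to_coolant_delta_t(power_w, r_ca_c_per_w)


if __name__ == "__main__":
    # 385 W and 0.028 C/W come from the article's example cold plate.
    rise = case_to_coolant_delta_t(385.0, 0.028)
    print(f"Case-to-coolant rise: {rise:.2f} C")

    # The 40 C coolant temperature is a hypothetical value for illustration.
    print(f"Case temperature: {case_temperature(40.0, 385.0, 0.028):.2f} C")
```

With these inputs the case sits about 10.8°C above the coolant, which leaves considerable margin before typical throttling thresholds even with warm coolant.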

Consider a GPU server running an AI training workload. The GPUs operate at near 100% utilization, generating maximum heat. A GPU waterblock ensures that this heat is immediately pulled away, allowing the GPU to maintain its boost clocks and complete the training faster, directly impacting the efficiency of the data center's computational output.

| Cooling Medium | Thermal Conductivity (W/m·K) | Heat Transfer Efficiency | Application Suitability |
| --- | --- | --- | --- |
| Copper | ~400 | Excellent | Server CPU/GPU waterblocks, high-power components |
| Aluminum | ~237 | Good | Standard heatsinks, moderate-power components |
| Air | ~0.026 | Poor | Low-power components, general electronics |
| Water/Coolant | ~0.6 (for water) | Very Good (carries heat away by flow rather than by conduction) | Liquid cooling loops, heat transport |

The internal channel design matters as much as the base material. A custom liquid cold plate might incorporate hundreds of tiny, precisely machined micro-channels. As the coolant flows through these channels, it picks up heat from the large internal surface area. The design also optimizes the flow path to distribute coolant uniformly across the entire hot surface of the CPU or GPU, reducing hot spots. Balancing maximum surface area against an acceptable pressure drop (e.g., 3.60 psi @ 1.0 LPM for a specific waterblock) is critical, because a higher pressure drop requires a more powerful pump and therefore more energy.
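To put the quoted operating point in perspective, the sketch below estimates two quantities for a single cold plate: the ideal hydraulic power needed to push coolant through it (pressure drop × volumetric flow) and the coolant's temperature rise while absorbing 385W. It assumes plain water near room temperature (density about 0.997 kg/L, specific heat about 4180 J/kg·K) and ignores pump and motor losses; other coolants such as glycol mixtures would give different numbers.

```python
PSI_TO_PA = 6894.757          # pascals per psi
LPM_TO_M3_S = 1.0 / 60_000.0  # cubic metres per second per litre per minute


def hydraulic_power_w(pressure_drop_psi: float, flow_lpm: float) -> float:
    """Ideal hydraulic power (W) to drive the flow; excludes pump inefficiency."""
    return pressure_drop_psi * PSI_TO_PA * flow_lpm * LPM_TO_M3_S


def coolant_temp_rise_c(
    heat_w: float,
    flow_lpm: float,
    density_kg_per_l: float = 0.997,  # assumed: water near room temperature
    cp_j_per_kg_k: float = 4180.0,    # assumed: water near room temperature
) -> float:
    """Coolant temperature rise (C) from inlet to outlet: Q / (m_dot * cp)."""
    mass_flow_kg_s = flow_lpm * density_kg_per_l / 60.0
    return heat_w / (mass_flow_kg_s * cp_j_per_kg_k)


if __name__ == "__main__":
    # 3.60 psi @ 1.0 LPM and 385 W are the article's example figures.
    print(f"Hydraulic power: {hydraulic_power_w(3.60, 1.0):.3f} W")
    print(f"Coolant temperature rise: {coolant_temp_rise_c(385.0, 1.0):.2f} C")
```

Under these assumptions the ideal pumping power is well under one watt per cold plate and the coolant warms by roughly 5.5°C, which illustrates why a modest pressure drop keeps pump energy small relative to the heat being removed.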

In a high-performance computing (HPC) cluster, where every server CPU and GPU is pushed to its limits, the internal design of each CPU waterblock and GPU waterblock ensures that the collective heat load is efficiently managed. This allows the entire cluster to operate at peak performance consistently, without individual components becoming thermal bottlenecks. The precision manufacturing, often involving advanced CNC machining, is essential to create these complex internal geometries reliably and repeatedly.

What Specific Efficiency Gains Do Waterblocks Offer Data Centers?

Beyond just keeping components cool, server CPU/GPU waterblocks translate directly into tangible efficiency gains for data centers, impacting everything from energy consumption to operational costs. These aren't just marginal improvements; they represent a fundamental shift in how data centers can optimize their resources.

Server CPU/GPU waterblocks offer specific efficiency gains by significantly reducing Power Usage Effectiveness (PUE) through lower cooling energy demands, enabling sustained peak performance that maximizes computational output per watt, and extending hardware lifespan. This results in lower operational costs, reduced thermal throttling, and a more sustainable data center footprint, directly boosting overall data center efficiency.

One of the most significant efficiency gains from deploying server CPU/GPU waterblocks is the reduction in Power Usage Effectiveness (PUE). PUE measures how efficiently a data center uses energy and is calculated by dividing total facility power by IT equipment power. A PUE of 1.0 is the theoretical ideal, meaning all energy goes to IT equipment. Traditional air-cooled data centers often have PUEs ranging from 1.5 to 2.0 or higher, meaning that for every watt delivered to IT equipment, another 0.5 to 1.0 watt or more goes to cooling and other facility overhead.

Liquid cooling, particularly direct-to-chip solutions using CPU waterblocks and GPU waterblocks, drastically reduces the energy required for cooling. Liquid is far more effective at transporting heat than air, meaning less energy is needed to move the heat away from the servers and out of the data center. Instead of massive CRAC (Computer Room Air Conditioner) units blasting cold air, liquid cooling systems can use smaller, more efficient pumps and heat exchangers. This direct heat removal allows data centers to operate at higher ambient temperatures, further reducing the energy needed for facility cooling.

For example, a data center transitioning from air cooling to liquid cooling for its high-density AI servers might see its PUE drop from 1.8 to 1.2. For a fixed IT load, this 0.6 reduction in PUE cuts total facility power by one third, which translates to large energy savings over time and directly lowers the data center's operating expenses and carbon footprint. The efficiency of a custom copper liquid cold plate in handling a 385W TDP component means that the cooling system doesn't have to work as hard to maintain optimal temperatures.
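The PUE arithmetic in this example can be checked in a few lines. This is a minimal sketch based only on the definition PUE = total facility power / IT equipment power; the 1,000 kW IT load is a hypothetical figure chosen for illustration.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT power."""
    return total_facility_kw / it_kw


def facility_power_kw(it_kw: float, pue_value: float) -> float:
    """Total facility power implied by an IT load and a PUE."""
    return it_kw * pue_value


def facility_saving_fraction(pue_before: float, pue_after: float) -> float:
    """Fraction of total facility power saved at a constant IT load."""
    return 1.0 - pue_after / pue_before


if __name__ == "__main__":
    it_load_kw = 1000.0  # hypothetical IT load
    before = facility_power_kw(it_load_kw, 1.8)
    after = facility_power_kw(it_load_kw, 1.2)
    print(f"Facility power: {before:.0f} kW -> {after:.0f} kW")
    print(f"Saving: {facility_saving_fraction(1.8, 1.2):.1%}")
```

For the hypothetical 1,000 kW IT load, facility power falls from 1,800 kW to 1,200 kW. The saving fraction depends only on the two PUE values, not on the size of the IT load.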

| PUE Value | Interpretation | Energy Efficiency Impact |
| --- | --- | --- |
| 2.0 | Non-IT functions (e.g., cooling, lighting) consume as much energy as the IT equipment itself (overhead equal to 100% of IT energy). | Very inefficient; high operational costs and environmental impact. |
| 1.5 | Non-IT overhead equals 50% of IT energy. | Moderately inefficient; significant room for improvement. |
| 1.2 | Non-IT overhead equals 20% of IT energy. | Highly efficient; substantial energy savings and reduced environmental footprint. |
| 1.0 | All energy goes directly to IT equipment (theoretical ideal). | Maximum efficiency; minimal operational costs for cooling. |

Another critical efficiency gain is the ability to maximize computational output per watt. In air-cooled environments, high-power CPUs and GPUs often hit thermal limits, leading to "thermal throttling." This means the processor automatically reduces its clock speed to prevent overheating, effectively wasting the potential performance it was designed to deliver.

Server CPU/GPU waterblocks prevent this. By maintaining consistently low operating temperatures, they allow processors to run at their maximum turbo frequencies for longer periods, even under sustained heavy loads. This means that every watt of power consumed by the CPU or GPU translates into more actual computational work done. For a data center, this directly impacts the return on investment for expensive hardware.

Consider a GPU server farm dedicated to rendering or scientific simulations. With GPU waterblocks, each GPU can render more frames or complete more calculations per hour than if it were air-cooled and throttling. This increased throughput means the data center can complete more jobs with the same number of servers, or achieve the same output with fewer servers, leading to significant cost savings in hardware, space, and energy. The ability of a copper liquid cold plate to handle a 385W TDP with a low R-ca of 0.028°C/W ensures that the processor's full potential is consistently realized.

How Do Waterblocks Enable Higher Density and Scalability in Data Centers?

As data centers strive to pack more computational power into smaller footprints, traditional air cooling quickly becomes a bottleneck. Server CPU/GPU waterblocks are a key enabler for higher density and scalability, allowing data centers to grow their processing capabilities without expanding their physical footprint or encountering thermal limitations.

Server CPU/GPU waterblocks enable higher density and scalability in data centers by efficiently removing concentrated heat, allowing more powerful CPUs and GPUs to be packed into each server rack. This reduces the physical space required for a given computational load, simplifies airflow management, and supports future upgrades to even higher-TDP components, making data centers more compact, powerful, and scalable.

One of the most immediate benefits of server CPU/GPU waterblocks is their ability to facilitate higher component density. Air-cooled servers require significant space between components and within racks for airflow. As heat loads increase, so does the need for more space, larger fans, and wider aisles, limiting how many powerful servers can fit into a given rack or data center floor.

Liquid cooling, by directly removing heat from the source, drastically reduces these spatial requirements. CPU waterblocks and GPU waterblocks are compact and allow servers to be designed with much tighter component spacing. This means:

More CPUs/GPUs per server: A single server chassis can house more high-TDP processors when liquid-cooled, as the thermal envelope is no longer dictated by air movement.

More servers per rack: Racks can be populated with a greater number of powerful servers, significantly increasing the computational density of each rack.

Reduced data center footprint: For a given computational capacity, a liquid-cooled data center can occupy a much smaller physical space compared to an air-cooled one.

For example, a rack that previously held 20 air-cooled servers might now accommodate 40 liquid-cooled servers, each potentially more powerful. This translates to substantial savings in real estate costs, construction, and infrastructure. The ability of a custom copper liquid cold plate to handle 385W in a compact form factor (e.g., 117.8x78.0x10.3mm) is a direct enabler of this high-density computing.
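Doubling rack density also doubles the heat the liquid loop must carry, which is worth estimating early. The sketch below sums processor heat per rack and the total coolant flow needed if every cold plate is fed in parallel. The figure of two 385W processors per server is a hypothetical assumption, and the 1.0 LPM per cold plate is borrowed from the example operating point quoted earlier in the article; heat from memory, storage, and power supplies is not included.

```python
def rack_processor_heat_kw(
    servers: int, processors_per_server: int, watts_per_processor: float
) -> float:
    """Total processor heat load per rack, in kW."""
    return servers * processors_per_server * watts_per_processor / 1000.0


def rack_coolant_flow_lpm(
    servers: int, processors_per_server: int, lpm_per_cold_plate: float
) -> float:
    """Total coolant flow per rack when all cold plates are fed in parallel."""
    return servers * processors_per_server * lpm_per_cold_plate


if __name__ == "__main__":
    processors_per_server = 2  # hypothetical server configuration
    for servers in (20, 40):   # rack populations from the article's example
        heat = rack_processor_heat_kw(servers, processors_per_server, 385.0)
        flow = rack_coolant_flow_lpm(servers, processors_per_server, 1.0)
        print(f"{servers} servers: {heat:.1f} kW processor heat, {flow:.0f} LPM")
```

Under these assumptions the 40-server rack carries about 30.8 kW of processor heat and needs about 80 LPM of coolant, so the facility loop and cooling distribution unit have to be sized accordingly.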

| Cooling Method | Server Density Potential | Rack Density Potential | Data Center Footprint |
| --- | --- | --- | --- |
| Air Cooling | Moderate | Moderate | Larger |
| Liquid Cooling | High | Very High | Smaller |

The trend in processor development is clear: more cores, higher clock speeds, and increased power consumption, leading to ever-higher TDPs. Air cooling is rapidly approaching its practical limits for these next-generation components. Server CPU/GPU waterblocks offer a future-proof solution, providing the thermal headroom necessary to accommodate these advancements.

By investing in liquid cooling infrastructure now, data centers can ensure they are ready for future generations of high-performance CPUs and GPUs, such as upcoming Intel Xeon or NVIDIA Blackwell architectures, which are expected to push TDPs even higher. A robust liquid cooling system, built around efficient CPU waterblocks and GPU waterblocks, can scale to meet these demands without requiring a complete overhaul of the cooling strategy. This protects hardware investments and allows for seamless upgrades.

Furthermore, liquid cooling simplifies airflow management within the data center. With heat being removed directly by liquid, the need for complex hot/cold aisle containment and high-velocity air movement is reduced. This creates a more stable and predictable thermal environment, making it easier to scale operations and integrate new technologies without encountering unexpected thermal challenges. KINGKA's expertise in custom liquid cold plates ensures that solutions are designed not just for today's needs but with an eye toward future scalability and performance demands.

What Role Do Custom Waterblocks Play in Optimizing Data Center Cooling?

While off-the-shelf waterblocks exist, the unique demands of enterprise data centers, with their diverse server architectures and specific performance targets, often necessitate tailored solutions. Custom server CPU/GPU waterblocks play a crucial role in optimizing data center cooling by providing precisely engineered thermal management that perfectly matches the specific component, server chassis, and overall cooling loop requirements.

Custom server CPU/GPU waterblocks optimize data center cooling by providing precisely tailored thermal solutions that perfectly match unique server architectures, CPU/GPU footprints, and cooling loop specifications. This ensures maximum thermal contact, optimized fluid dynamics, and seamless integration, leading to superior heat dissipation (e.g., 0.028°C/W R-ca for 385W TDP), reduced pressure drop, and enhanced overall system efficiency and reliability for high-performance computing.

Data centers are not monolithic; they house a wide array of server types, from dense blade servers to specialized GPU-accelerated systems. Each server chassis, motherboard layout, and processor (CPU or GPU) has unique physical dimensions and thermal characteristics. An off-the-shelf waterblock, designed for a generic application, may not provide optimal contact, fit correctly, or integrate seamlessly with the existing plumbing.

Custom server CPU/GPU waterblocks are designed from the ground up to precisely match these specific requirements. This involves:

Exact Footprint Matching: Ensuring the waterblock's base perfectly covers the CPU's or GPU's IHS, maximizing thermal contact. For example, a custom copper liquid cold plate can be designed to fit the exact dimensions of a specific Intel EGS CPU socket or a custom GPU module.

Optimized Port Placement: Positioning inlet and outlet ports to align perfectly with the server's internal plumbing, simplifying installation and reducing the need for complex, restrictive tubing runs.

Chassis Integration: Designing the waterblock to fit within the server's specific height and width constraints, ensuring compatibility with rack-mounted systems.

Flow Path Optimization: Customizing the internal micro-channel or fin design to achieve the ideal balance between thermal performance and pressure drop for the specific coolant flow rate available in the data center's liquid cooling loop.

This level of customization ensures that every watt of heat is efficiently removed, preventing thermal bottlenecks and allowing the server to operate at its peak. KINGKA's free technical design team support, including thermal analysis and airflow simulations, is invaluable in developing these bespoke solutions, ensuring that the custom cold plates are perfectly integrated and perform optimally.

| Customization Aspect | Benefit for Data Center Cooling |
| --- | --- |
| Exact Footprint | Maximizes thermal contact, eliminates hot spots on CPU/GPU. |
| Port Placement | Simplifies plumbing, reduces flow restriction, improves aesthetics. |
| Chassis Integration | Ensures compatibility with existing server hardware, prevents physical interference. |
| Internal Flow Path | Optimizes heat transfer efficiency while minimizing pressure drop for the specific system. |

Beyond physical fit, custom server CPU/GPU waterblocks allow for performance tuning tailored to specific data center workloads and the characteristics of the overall liquid cooling system. Different applications (e.g., AI inference vs. scientific simulation) might have varying thermal profiles, and different data centers might use different coolants or pump configurations.

A custom design can account for:

Coolant Properties: Adjusting internal channel dimensions and materials to optimize performance for specific coolants (e.g., deionized water, glycol mixtures, dielectric fluids).

Flow Rate and Pressure Drop: Designing the waterblock to achieve optimal thermal performance at the specific flow rates and pressures provided by the data center's cooling distribution unit (CDU) and pumps. This ensures the entire system operates efficiently without overworking the pumps or creating excessive resistance. For example, a 385W TDP cold plate can be designed around an operating point of 3.60 psi @ 1.0 LPM.

Target Temperatures: Engineering the waterblock to maintain specific component temperatures, which might vary depending on the desired performance, longevity targets, or specific requirements of the CPUs/GPUs (e.g., Intel EGS processors).
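The design parameters listed above interact: a target case temperature, the coolant temperature the facility can supply, and the heat load together fix the thermal resistance a custom cold plate must achieve. The sketch below works that relation in both directions. The 70°C case target and 45°C coolant supply are hypothetical values for illustration, and R-ca is assumed to be referenced to the coolant temperature at the cold plate.

```python
def required_r_ca(target_case_c: float, coolant_c: float, power_w: float) -> float:
    """Maximum thermal resistance (C/W) that still meets the case target."""
    return (target_case_c - coolant_c) / power_w


def max_coolant_temp_c(target_case_c: float, power_w: float, r_ca_c_per_w: float) -> float:
    """Warmest coolant (C) a given cold plate can accept and still meet the target."""
    return target_case_c - power_w * r_ca_c_per_w


def meets_target(
    r_ca_c_per_w: float, target_case_c: float, coolant_c: float, power_w: float
) -> bool:
    """True if the cold plate keeps the case at or below the target temperature."""
    return r_ca_c_per_w <= required_r_ca(target_case_c, coolant_c, power_w)


if __name__ == "__main__":
    target_case_c = 70.0  # hypothetical target case temperature
    coolant_c = 45.0      # hypothetical warm-water supply temperature
    limit = required_r_ca(target_case_c, coolant_c, 385.0)
    print(f"Required R-ca: at most {limit:.4f} C/W")
    print(f"0.028 C/W plate meets target: {meets_target(0.028, target_case_c, coolant_c, 385.0)}")
    print(f"Max coolant temperature: {max_coolant_temp_c(target_case_c, 385.0, 0.028):.2f} C")
```

A calculation like this shows how a low-resistance cold plate creates headroom for warmer coolant, which is what allows a facility to run its liquid loop at higher supply temperatures.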

This granular control over design parameters ensures that the custom cold plates deliver not just generic cooling, but precisely optimized thermal management that maximizes the efficiency and reliability of the entire data center infrastructure. KINGKA's 15+ years of experience in precision manufacturing and thermal solutions positions us to deliver these highly specialized and optimized server CPU/GPU waterblocks.

What Are the Long-Term Benefits of Deploying Waterblocks in Data Centers?

Deploying server CPU/GPU waterblocks in data centers isn't just about immediate performance gains; it's a strategic investment that yields significant long-term benefits, impacting operational costs, hardware lifespan, and environmental sustainability. These advantages make liquid cooling a compelling choice for future-proof data center design.

Deploying server CPU/GPU waterblocks offers long-term benefits including significantly extended hardware lifespan by maintaining lower, more stable operating temperatures, reduced total cost of ownership (TCO) through lower energy consumption (PUE) and fewer hardware replacements, and enhanced environmental sustainability. This strategic investment ensures data centers remain efficient, reliable, and competitive for years to come, especially for high-TDP components like those on Intel EGS platforms.

One of the most compelling long-term benefits of server CPU/GPU waterblocks is the significant extension of hardware lifespan. Electronic components, particularly high-performance semiconductors like server CPUs and GPUs, degrade faster at higher operating temperatures. By keeping these components consistently cooler and within their optimal temperature ranges, liquid cooling dramatically reduces thermal stress.

Reduced Degradation: Lower temperatures slow down the electromigration and other physical processes that lead to component wear and eventual failure. This means that expensive CPUs (e.g., Intel EGS processors) and GPUs can operate reliably for many more years than if they were subjected to higher, fluctuating temperatures under air cooling.

Fewer Replacements: An extended lifespan translates directly into fewer hardware replacements. This reduces capital expenditure (CapEx) on new components and minimizes the operational expenditure (OpEx) associated with maintenance, technician time, and potential downtime for component swaps.

Lower Downtime Costs: Hardware failures can lead to costly downtime for mission-critical applications. By improving reliability, server CPU/GPU waterblocks contribute to higher uptime, protecting revenue and ensuring continuous service delivery.

For example, if a GPU waterblock extends the life of an NVIDIA H100 GPU from 3 years to 5 years, the data center saves the cost of replacing that GPU and avoids any associated downtime for two additional years. This significantly lowers the Total Cost of Ownership (TCO) for the entire server infrastructure. KINGKA's commitment to quality, through advanced manufacturing and rigorous testing, ensures that our custom copper liquid cold plates deliver this long-term reliability.
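The effect of a longer service life on hardware cost can be expressed as an annualized figure. This is a simple sketch that spreads the purchase price evenly over the service life and ignores financing, resale value, and the cost of the cooling hardware itself. The unit price used here is a hypothetical placeholder, not a quoted price for any GPU; the 3-year and 5-year lifespans come from the example above.

```python
def annualized_cost(unit_price: float, lifespan_years: float) -> float:
    """Purchase price spread evenly over the service life."""
    return unit_price / lifespan_years


def annualized_saving_fraction(lifespan_before: float, lifespan_after: float) -> float:
    """Fractional reduction in annualized hardware cost from a longer life."""
    return 1.0 - lifespan_before / lifespan_after


if __name__ == "__main__":
    unit_price = 30_000.0  # hypothetical placeholder price per GPU
    before = annualized_cost(unit_price, 3.0)
    after = annualized_cost(unit_price, 5.0)
    print(f"Annualized cost: {before:.0f} -> {after:.0f} per GPU per year")
    print(f"Reduction: {annualized_saving_fraction(3.0, 5.0):.0%}")
```

The 40% reduction in annualized hardware cost follows from the lifespans alone and holds for any unit price.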

| Benefit | Impact on Data Center Operations |
| --- | --- |
| Extended Hardware Lifespan | Fewer component replacements, reduced CapEx, lower maintenance OpEx. |
| Reduced Downtime | Higher uptime for mission-critical applications, protected revenue. |
| Lower Energy Consumption (PUE) | Significant OpEx savings, reduced carbon footprint. |
| Increased Performance Density | Maximized utilization of physical space, higher computational output per rack. |

Beyond financial benefits, deploying server CPU/GPU waterblocks contributes significantly to environmental sustainability, which is becoming an increasingly important factor for data centers globally.

Reduced Energy Consumption: As discussed, liquid cooling lowers PUE, meaning less electricity is consumed for cooling. This directly translates to a smaller carbon footprint and reduced environmental impact. Data centers can achieve their sustainability goals more effectively.

Heat Reuse Potential: Liquid cooling systems remove heat in a concentrated form at a higher temperature than air. This "waste heat" can potentially be captured and reused for other purposes, such as heating office buildings or district heating systems, further enhancing energy efficiency and sustainability. This concept, known as "heat reuse," is a major area of innovation for future data centers.

Competitive Advantage: Data centers that can demonstrate superior energy efficiency and sustainability often gain a competitive advantage, attracting environmentally conscious clients and meeting increasingly stringent regulatory requirements. Investing in advanced thermal management with server CPU/GPU waterblocks positions a data center as a leader in efficiency and responsible operation.
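To give a sense of scale for the heat reuse point above, the sketch below estimates how much thermal energy one liquid-cooled component delivers to the coolant in a year. It assumes the component dissipates 385W continuously, which is an upper bound since real utilization varies, and it treats the fraction of heat actually captured for reuse as a hypothetical parameter.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours


def annual_heat_kwh(power_w: float, capture_fraction: float = 1.0) -> float:
    """Thermal energy (kWh) available per year from one component.

    capture_fraction is the share of heat recovered for reuse (assumed).
    """
    return power_w * HOURS_PER_YEAR * capture_fraction / 1000.0


if __name__ == "__main__":
    # 385 W is the article's example TDP, assumed here to be continuous.
    print(f"Heat per component: {annual_heat_kwh(385.0):.1f} kWh/year")

    # A 50% capture fraction is a hypothetical value for illustration.
    print(f"Recovered at 50% capture: {annual_heat_kwh(385.0, 0.5):.1f} kWh/year")
```

Multiplied across thousands of processors, even a partial capture fraction represents a meaningful supply of low-grade heat for building or district heating.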

By embracing liquid cooling solutions like custom copper liquid cold plates, data centers are not just optimizing their current operations; they are building a more sustainable, resilient, and competitive infrastructure for the future, capable of handling the ever-growing demands of high-performance computing and AI workloads. KINGKA, with its one-stop thermal solutions and 15+ years of experience, is a trusted partner in this journey towards more efficient and sustainable data centers.

The relentless drive for computational power in modern data centers has made advanced thermal management not just a luxury, but a necessity. Server CPU/GPU waterblocks stand out as a pivotal technology, fundamentally transforming how data centers operate and achieve efficiency. By enabling direct, highly efficient heat transfer, these precision-engineered components allow processors to run at peak performance without throttling, even when dissipating hundreds of watts of heat.

The benefits are clear and far-reaching: significantly reduced Power Usage Effectiveness (PUE) leads to substantial energy savings and lower operational costs. The ability to pack more powerful CPUs and GPUs into smaller footprints drives higher density and scalability, optimizing real estate and future-proofing infrastructure. Furthermore, the extended hardware lifespan and enhanced reliability contribute to a lower Total Cost of Ownership and a more sustainable environmental footprint. For specialized needs, custom server CPU/GPU waterblocks ensure perfect integration and optimized performance for unique server architectures and demanding workloads, including those on Intel EGS platforms.

For data centers aiming to maximize performance, minimize costs, and build a sustainable future, investing in high-quality server CPU/GPU waterblocks is a strategic imperative. Partnering with an experienced provider like KINGKA, with over 15 years in custom thermal solutions and precision manufacturing, ensures that these critical components are designed and delivered to the highest standards, empowering your data center to achieve unparalleled efficiency and reliability.