Author: Site Editor, Publish Time: 2025-12-24

AI workloads push GPUs to extreme heat levels, risking throttling, hardware failures, and stalled progress in training massive models. Air cooling struggles with dense clusters, wasting energy and space while limiting scale. Liquid-cooled GPU systems solve this by directly absorbing and transferring heat via coolant, enabling denser setups, lower energy use, and uninterrupted high-performance computing for critical AI tasks.

Picture scaling an AI inference cluster to handle real-time queries from millions of users. Liquid-cooled GPU systems keep everything running cool, efficient, and reliable, turning potential bottlenecks into seamless operations.

Table of Contents

How Do Liquid-Cooled GPU Systems Manage High Heat Densities?

What Makes Them Essential for System Reliability and Uptime?

In modern AI clusters, GPUs such as the NVIDIA H100 draw up to 700W each, creating heat fluxes that overwhelm air-based cooling in tight rack spaces.

Liquid-cooled GPU systems manage high heat densities by circulating coolant directly over GPU surfaces, dissipating up to 1000W per module with uniform temperature control and preventing the hotspots that would degrade performance in dense 8-GPU servers.

Direct contact with the heat source lets liquid succeed where air falls short in compact environments.

Examples: NVIDIA's HGX platforms with manifold-integrated cold plates; custom setups in large-scale AI supercomputers using water-glycol mixtures for 50kW+ racks.

Theoretical basis: Water-based coolant has about 4x the specific heat capacity of air per unit mass, which makes forced convection efficient and brings cold-plate thermal resistance down to around 0.05°C/W. Trade-offs: Plumbing adds initial complexity but delivers 2-3x higher density; redundant loops mitigate the risk of pump failure.
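A quick worked example puts the 0.05°C/W figure in context. The Python sketch below uses a single lumped thermal resistance, so the GPU case temperature is simply the coolant inlet temperature plus power times resistance, and it also compares how much heat water and air hold per kilogram and per cubic metre. The 30°C inlet temperature and the room-temperature property values are assumptions for illustration; real cold plates add thermal-interface and spreading resistances that this model ignores.

```python
# Lumped thermal model: temperature rise above coolant inlet = power x resistance.
# Property values are approximate room-temperature figures (assumptions).

WATER_CP = 4180.0   # J/(kg*K), specific heat of water
WATER_RHO = 997.0   # kg/m^3, density of water
AIR_CP = 1005.0     # J/(kg*K), specific heat of air
AIR_RHO = 1.18      # kg/m^3, density of air near 25 degC


def case_temperature(power_w: float, r_th_c_per_w: float, inlet_c: float) -> float:
    """Estimate case temperature (degC) from power, thermal resistance, and coolant inlet."""
    return inlet_c + power_w * r_th_c_per_w


def volumetric_heat_capacity(cp: float, rho: float) -> float:
    """Heat stored per cubic metre per kelvin, in J/(m^3*K)."""
    return cp * rho


if __name__ == "__main__":
    # A 700 W GPU on a 0.05 degC/W cold plate fed with 30 degC coolant.
    print(f"Case temperature: {case_temperature(700, 0.05, 30):.1f} degC")  # 65.0

    mass_ratio = WATER_CP / AIR_CP
    vol_ratio = (volumetric_heat_capacity(WATER_CP, WATER_RHO)
                 / volumetric_heat_capacity(AIR_CP, AIR_RHO))
    print(f"Per kg, water stores {mass_ratio:.1f}x the heat of air")
    print(f"Per m^3, water stores {vol_ratio:.0f}x the heat of air")
```

Per kilogram the ratio is about 4x; per cubic metre, which is what a pipe or channel actually moves, water holds several thousand times more heat than air, which is why a thin coolant loop can stand in for a large volume of moving air.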

Practical impacts: Allows 60-80 GPUs per rack versus 20-30 with air, boosting compute power in limited data center footprints.

| Density Metric | Air Cooling | Liquid Cooling |
|---|---|---|
| Power per GPU | 300-500W | 700-1000W+ |
| GPUs per Rack | 20-30 | 60-80 |
| Rack Heat Load | 20-30 kW/rack | 50-100 kW/rack |

Practical advice: Monitor coolant flow rates (0.5-2 GPM per GPU) with sensors; perform leak tests during installation to ensure integrity in high-density setups.
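The flow-rate range above can be sanity-checked with a simple energy balance, ΔT = P / (ṁ·c_p). The sketch below converts sensor readings in US gallons per minute to mass flow, estimates how much the coolant warms across a 1000W GPU, and flags any GPU whose flow falls outside the 0.5-2 GPM band. The readings and GPU IDs are hypothetical, and plain-water properties are assumed; a water-glycol mix has a lower specific heat and will warm slightly more.

```python
# Check per-GPU coolant flow against a target band and estimate coolant temperature rise.
LITERS_PER_GALLON = 3.785411784  # US gallon
WATER_CP = 4180.0                # J/(kg*K); a water-glycol mix is lower (assumption)
WATER_DENSITY = 0.997            # kg/L near 25 degC (assumption)


def gpm_to_kg_per_s(gpm: float) -> float:
    """Convert US gallons per minute to a mass flow rate in kg/s."""
    return gpm * LITERS_PER_GALLON * WATER_DENSITY / 60.0


def coolant_delta_t(power_w: float, gpm: float) -> float:
    """Coolant temperature rise across one cold plate: dT = P / (m_dot * cp)."""
    return power_w / (gpm_to_kg_per_s(gpm) * WATER_CP)


def check_flow(readings_gpm: dict[str, float], low: float = 0.5, high: float = 2.0) -> list[str]:
    """Return the IDs of GPUs whose measured flow falls outside [low, high] GPM."""
    return [gpu for gpu, gpm in readings_gpm.items() if not low <= gpm <= high]


if __name__ == "__main__":
    # Hypothetical flow-sensor readings for four GPUs in one server.
    readings = {"gpu0": 1.1, "gpu1": 0.4, "gpu2": 1.3, "gpu3": 2.3}
    print("Out of range:", check_flow(readings))  # ['gpu1', 'gpu3']
    for gpu, gpm in readings.items():
        print(f"{gpu}: {gpm} GPM -> dT = {coolant_delta_t(1000, gpm):.1f} K at 1000 W")
```

At 1 GPM a 1000W GPU warms water by roughly 4 K; across the 0.5-2 GPM band the rise is about 2-8 K.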

AI training consumes massive amounts of power, and clusters often exceed 1MW, so inefficient cooling inflates both electricity bills and PUE (power usage effectiveness, total facility power divided by IT power).

Liquid-cooled GPU systems boost energy efficiency by capturing heat at the source, which cuts fan and chiller energy enough to bring PUE under 1.1 and reduce operational costs by 30-50% compared with air-cooled alternatives in large-scale inference farms. The captured heat can also be reused for facility heating.

Targeted cooling minimizes fan energy waste.

Examples: Azure AI clusters recycling coolant heat for office warming; Equinix data centers achieving 40% energy savings with immersion cooling variants.

Theoretical basis: Liquid's convective heat transfer coefficient is far higher than air's (often by an order of magnitude or more), so much less airflow is needed and auxiliary power drops from about 20% to 5% of the total. Trade-offs: Higher upfront costs, typically recovered within 2-3 years; immersion variants require compatible dielectric fluids.
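Because PUE is total facility power divided by IT power, the auxiliary-power and savings figures in this section can be cross-checked with a few lines of arithmetic. The sketch below treats everything that is not IT equipment as auxiliary load and assumes a constant 1 MW IT load, a hypothetical value chosen only to make the yearly totals easy to read.

```python
# PUE = total facility energy / IT equipment energy.
HOURS_PER_YEAR = 8760


def pue_from_aux_fraction(aux_fraction: float) -> float:
    """PUE when non-IT load (cooling, power conversion) is a given fraction of total power."""
    if not 0 <= aux_fraction < 1:
        raise ValueError("aux_fraction must be in [0, 1)")
    return 1.0 / (1.0 - aux_fraction)


def annual_facility_mwh(it_load_mw: float, pue: float) -> float:
    """Yearly facility energy (MWh) for a constant IT load at a given PUE."""
    return it_load_mw * pue * HOURS_PER_YEAR


def savings_fraction(pue_before: float, pue_after: float) -> float:
    """Fractional cut in facility energy at the same IT load."""
    return 1.0 - pue_after / pue_before


if __name__ == "__main__":
    print(f"Aux 20% -> PUE {pue_from_aux_fraction(0.20):.2f}")  # 1.25
    print(f"Aux  5% -> PUE {pue_from_aux_fraction(0.05):.2f}")  # 1.05
    for before in (1.5, 2.0):
        print(f"PUE {before} -> 1.05 saves {savings_fraction(before, 1.05):.0%} of facility energy")
    # Hypothetical constant 1 MW IT load over one year at each PUE.
    print(f"{annual_facility_mwh(1.0, 1.5):,.0f} MWh vs {annual_facility_mwh(1.0, 1.05):,.0f} MWh")
```

Using the PUE ranges from this section's table, moving from 1.5 to 1.05 cuts facility energy by 30%, and from 2.0 to 1.05 by roughly 47%, in line with the 30-50% band quoted. PUE itself does not credit reused heat; The Green Grid's Energy Reuse Effectiveness (ERE) metric exists to capture that benefit.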

Practical impacts: Reduces carbon footprint by 20-40% through lower grid draw and reuse of waste heat in district heating systems.

| Efficiency Factor | Air-Cooled System | Liquid-Cooled System |
|---|---|---|
| Typical PUE | 1.5-2.0 | 1.05-1.2 |
| Energy Savings | Baseline | 30-50% |
| Heat Reuse Potential | Low | High |

Advice: Pair liquid loops with chiller-free designs that reject heat to ambient air; audit PUE quarterly and use the results to tune coolant supply temperatures (20-40°C).

Growing an AI cluster calls for modular expansion without overhauling the facility, but air cooling caps rack power at roughly 20-30kW.

Liquid-cooled GPU systems facilitate scalability by supporting 50-100kW racks, allowing seamless addition of nodes for exascale AI training without footprint expansion, critical for hyperscalers handling petabyte datasets.

Modular manifolds simplify upgrades.

Examples: CoreWeave's liquid-cooled pods scaling to 1000+ GPUs; IBM's hybrid systems for enterprise AI inference.

Theoretical basis: Closed-loop systems keep performance consistent at any scale through pressure-balanced coolant distribution. Trade-offs: Retrofitting existing facilities is harder than building greenfield; scalable pumps handle variable loads.
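Pressure-balanced distribution means every branch off a manifold sees roughly the same pressure difference, so flow divides according to each branch's hydraulic resistance. The sketch below models each cold-plate branch with a quadratic loss, ΔP = k·Q², and assumes negligible pressure drop along the manifold headers; the k values and the 12 L/min supply are hypothetical, and real designs work from measured pressure-drop curves.

```python
import math


def split_flow(total_flow_lpm: float, k_values: list[float]) -> list[float]:
    """Divide total flow among parallel branches that share one pressure drop.

    Each branch follows dP = k * Q**2, so at a common dP its flow is
    proportional to 1 / sqrt(k).
    """
    conductances = [1.0 / math.sqrt(k) for k in k_values]
    total = sum(conductances)
    return [total_flow_lpm * c / total for c in conductances]


def branch_pressure_drops(flows: list[float], k_values: list[float]) -> list[float]:
    """Pressure drop across each branch for the given flows (same units as k)."""
    return [k * q ** 2 for k, q in zip(k_values, flows)]


if __name__ == "__main__":
    # Eight matched cold-plate branches: flow divides evenly (1.5 L/min each).
    matched = [0.5] * 8  # hypothetical k in kPa / (L/min)^2
    print([round(q, 3) for q in split_flow(12.0, matched)])

    # Add one branch with 4x the resistance: it receives half the flow of the others.
    mixed = matched + [2.0]
    flows = split_flow(12.0, mixed)
    print([round(q, 3) for q in flows])
    print([round(dp, 3) for dp in branch_pressure_drops(flows, mixed)])  # all equal
```

This is why modular manifolds are easiest to extend with branches that match the existing ones: a mismatched branch either starves itself or draws flow away from its neighbours unless balancing valves correct the difference.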

Practical impacts: Speeds deployment by 50%, enabling rapid model iteration in dynamic AI environments.

| Scale Parameter | Air Cooling Limits | Liquid Cooling Enables |
|---|---|---|
| Rack Power | 20-30kW | 50-100kW+ |
| Cluster Expansion | Slow, space-heavy | Modular, dense |
| Deployment Time | Months | Weeks |

Testing: Simulate load balancing in software before deployment; use quick-connect fittings so nodes can be hot-swapped and added as the cluster scales.
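One simple way to simulate load balancing is to pack nodes into racks without exceeding each rack's cooling capacity. The sketch below uses the first-fit decreasing heuristic, a standard bin-packing approximation rather than an optimal solver. The 10.5 kW node power and the 100 kW and 30 kW rack limits are hypothetical values chosen to mirror the liquid and air rack ranges in the table above.

```python
from dataclasses import dataclass, field


@dataclass
class Rack:
    name: str
    capacity_kw: float
    nodes: list[tuple[str, float]] = field(default_factory=list)

    @property
    def load_kw(self) -> float:
        return sum(kw for _, kw in self.nodes)


def place_nodes(nodes: dict[str, float], racks: list[Rack]) -> list[str]:
    """First-fit decreasing: take the largest nodes first, each into the first rack with headroom.

    Returns the names of nodes that did not fit anywhere.
    """
    unplaced = []
    for name, kw in sorted(nodes.items(), key=lambda item: item[1], reverse=True):
        for rack in racks:
            if rack.load_kw + kw <= rack.capacity_kw:
                rack.nodes.append((name, kw))
                break
        else:
            unplaced.append(name)
    return unplaced


if __name__ == "__main__":
    nodes = {f"node{i:02d}": 10.5 for i in range(12)}  # hypothetical 8-GPU servers

    liquid = [Rack("liquid-A", 100.0), Rack("liquid-B", 100.0)]
    print("Liquid racks, unplaced:", place_nodes(nodes, liquid))
    for rack in liquid:
        print(rack.name, len(rack.nodes), "nodes,", rack.load_kw, "kW")

    air = [Rack("air-A", 30.0), Rack("air-B", 30.0)]
    print("Air racks, unplaced:", len(place_nodes(nodes, air)), "nodes")
```

With these assumed numbers, two liquid-cooled racks hold all twelve nodes, while two air-cooled racks hold only four and leave eight unplaced.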

Production AI inference services often target 99.999% availability, yet heat-induced failures in air-cooled setups cause costly downtime.

Liquid-cooled GPU systems ensure reliability by maintaining GPU temperatures below 60°C under full load, extending MTBF by 2-3x and minimizing throttling events that disrupt training cycles in multi-node clusters.

Uniform cooling prevents thermal runaway.

Examples: Platforms with redundant coolant paths for Blackwell GPUs; Dell's AI servers logging zero thermal shutdowns in tests.

Theoretical basis: Rapid heat absorption keeps junction temperatures low and stable, slowing temperature-driven wear such as electromigration. Trade-offs: More monitoring overhead than air's simplicity, although integrated sensors detect anomalies early.
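Electromigration lifetime is commonly modeled with Black's equation, MTTF = A·J⁻ⁿ·exp(Eₐ/kT), where J is current density, Eₐ an activation energy, k Boltzmann's constant and T absolute temperature. At a fixed current density, the lifetime ratio between two junction temperatures reduces to the exponential term. The sketch below computes that ratio; the 85°C and 60°C temperatures and the 0.5-0.9 eV activation energies are assumptions for illustration, and real GPU lifetimes involve many failure mechanisms beyond electromigration.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant, eV/K


def arrhenius_acceleration(t_hot_c: float, t_cool_c: float, activation_energy_ev: float) -> float:
    """Lifetime ratio (cool / hot) from the exponential term of Black's equation.

    Assumes the same current density at both temperatures, so the J^-n term cancels.
    """
    t_hot_k = t_hot_c + 273.15
    t_cool_k = t_cool_c + 273.15
    exponent = activation_energy_ev / BOLTZMANN_EV_PER_K * (1.0 / t_cool_k - 1.0 / t_hot_k)
    return math.exp(exponent)


if __name__ == "__main__":
    # Illustrative junction temperatures and a range of assumed activation energies.
    for ea in (0.5, 0.7, 0.9):
        factor = arrhenius_acceleration(85.0, 60.0, ea)
        print(f"Ea = {ea} eV: running at 60 degC instead of 85 degC -> {factor:.1f}x lifetime")
```

The result swings widely with the assumed activation energy, so treat it as an illustration of direction and sensitivity rather than a lifetime prediction.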

Practical impacts: Boosts uptime to five nines, critical for real-time AI like autonomous systems.
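Five nines is a tight budget. The sketch below converts availability targets into annual downtime and applies the standard steady-state relation, availability = MTBF / (MTBF + MTTR), which links MTBF gains to uptime. The MTBF values and the 4-hour repair time are hypothetical.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; ignores leap years


def annual_downtime_minutes(availability: float) -> float:
    """Expected downtime per year for a given availability (0-1)."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def availability_from_mtbf(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability = MTBF / (MTBF + MTTR)."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


if __name__ == "__main__":
    for label, a in (("three nines", 0.999), ("five nines", 0.99999)):
        print(f"{label}: {annual_downtime_minutes(a):.2f} min/year")

    # Hypothetical component: 4 h repair time, MTBF doubled from 50,000 h to 100,000 h.
    for mtbf in (50_000, 100_000):
        print(f"MTBF {mtbf} h -> availability {availability_from_mtbf(mtbf, 4):.6f}")
```

Five nines leaves about 5.3 minutes of downtime per year, versus roughly 8.8 hours at three nines. Because unavailability is approximately MTTR / MTBF when repairs are short, doubling MTBF roughly halves expected downtime.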

| Reliability Metric | Air Cooling | Liquid Cooling |
|---|---|---|
| GPU Lifespan | 3-5 years | 7-10 years |
| Throttling Incidence | High under load | Minimal |
| Uptime Achievement | 99.9% | 99.999% |

Advice: Implement predictive maintenance; flush coolant annually to avoid corrosion.
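Predictive maintenance can start as simply as watching telemetry for sudden departures from recent behaviour. The sketch below flags coolant temperature readings that sit more than three standard deviations from a trailing 10-sample mean. The readings, window size and thresholds are hypothetical; production systems typically combine flow, pressure and temperature signals with more robust models.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(readings: list[float], window: int = 10,
                     threshold: float = 3.0, min_sigma: float = 0.1) -> list[int]:
    """Flag readings that deviate from the trailing-window mean by more than
    `threshold` standard deviations.

    `min_sigma` (in the readings' units) stops a perfectly flat history from
    flagging every tiny change.
    """
    history = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = max(stdev(history), min_sigma)
            if abs(value - mu) > threshold * sigma:
                flagged.append(i)
        history.append(value)
    return flagged


if __name__ == "__main__":
    # Hypothetical coolant outlet temperatures (degC) sampled once a minute.
    outlet_c = [40.0, 40.2, 39.9, 40.1, 40.0, 40.3, 39.8, 40.1, 40.0, 40.2,
                40.1, 46.5, 40.0]
    print("Anomalous samples at indices:", detect_anomalies(outlet_c))  # [11]
```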

Next-generation chips such as NVIDIA Blackwell draw 1000W or more, pushing air cooling to its limits when sustained tensor core performance is required.

Liquid-cooled GPU systems are vital for advanced architectures because they provide precise cooling at the chip package, sustaining peak FLOPS through prolonged training and handling complex neural networks without performance dips.

Adaptable plates fit evolving designs.

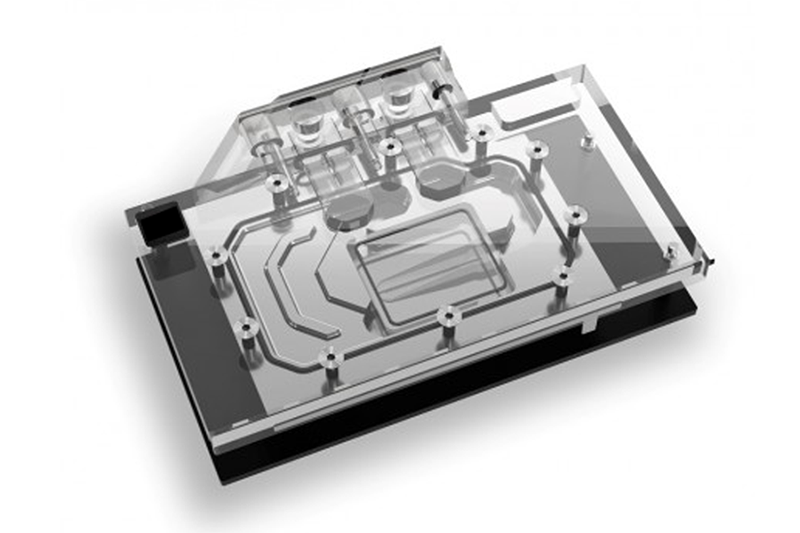

Examples: AMD MI300X setups with vacuum-brazed cold plates; custom friction-stir-welded (FSW) plates for Hopper-series GPUs in research clusters.

Theoretical basis: Integrated microchannels disrupt the thermal boundary layer and increase wetted surface area, raising the heat flux a plate can remove. Trade-offs: Custom tooling costs more than off-the-shelf air cooling, but the plates can cover multi-chip modules.

Practical impacts: Lets next-generation GPUs sustain their rated throughput, which is what makes the up-to-4x faster LLM training claimed for newer platforms achievable.

| GPU Model | Power Draw | Typical Cooling Approach |
|---|---|---|
| H100 (SXM) | 700W | Air or direct liquid (cold plate) |
| Blackwell | 1000W+ | Direct liquid (cold plate) or immersion |

Testing: Benchmark sustained FLOPS while capturing thermal images; adjust coolant viscosity (for example, via glycol concentration) for new dies.
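When tuning coolant for a new cold plate, it helps to know the flow regime in its channels. The sketch below computes the hydraulic diameter of a rectangular microchannel and the Reynolds number, Re = ρvD_h/μ, for plain water and a water-glycol mix. The channel dimensions, velocity and glycol-mix properties are assumptions for illustration, and the 2300 laminar threshold is a rule of thumb carried over from pipe flow.

```python
def hydraulic_diameter(width_m: float, depth_m: float) -> float:
    """D_h = 4 * cross-sectional area / wetted perimeter, for a rectangular channel."""
    return 4.0 * width_m * depth_m / (2.0 * (width_m + depth_m))


def reynolds_number(density: float, velocity: float, d_h: float, viscosity: float) -> float:
    """Re = rho * v * D_h / mu (dimensionless)."""
    return density * velocity * d_h / viscosity


# Assumed fluid properties near 25 degC: (density kg/m^3, dynamic viscosity Pa*s).
FLUIDS = {
    "water": (997.0, 0.89e-3),
    "water-glycol (approx.)": (1030.0, 2.5e-3),
}

if __name__ == "__main__":
    d_h = hydraulic_diameter(0.3e-3, 2.0e-3)  # hypothetical 0.3 mm x 2 mm channel
    velocity = 1.0  # m/s, hypothetical
    for name, (rho, mu) in FLUIDS.items():
        re = reynolds_number(rho, velocity, d_h, mu)
        regime = "laminar" if re < 2300 else "transitional/turbulent"
        print(f"{name}: Re = {re:.0f} ({regime})")
```

Both cases land well inside the laminar range, where a thin, stable boundary layer limits heat transfer; that is one motivation for the boundary-layer disruption described above. In fully developed laminar flow the pressure drop at fixed velocity also scales linearly with viscosity, so a mix roughly 2.8x as viscous as water needs roughly 2.8x the pumping pressure.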

Banks of high-RPM fans make air-cooled colocation halls loud and consume extra power, while AI's energy demand strains power grids.

Liquid-cooled GPU systems reduce noise to near-silent levels by eliminating high-RPM fans and lower environmental impact through 30-40% less power draw, aligning with sustainable AI goals in green data centers.

Quiet, low-speed pumps take the place of high-RPM fans.

Examples: Immersion-cooled AI farms cutting emissions; designs saving 20% on carbon via efficiency.

Theoretical basis: Fanless designs bring noise below 30dB, and efficient heat removal curbs overall consumption. Trade-offs: Coolant must eventually be disposed of, whereas air produces no waste.
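Decibels add logarithmically: every doubling of identical, incoherent sources adds about 3 dB, so meaningful noise reductions come from removing most fans rather than a few. The sketch below sums incoherent sources measured at the same point; the 50 dB per-fan level and the fan counts are hypothetical.

```python
import math


def combined_level_db(levels_db: list[float]) -> float:
    """Total sound pressure level of incoherent sources: 10 * log10(sum of 10^(L/10))."""
    return 10.0 * math.log10(sum(10.0 ** (level / 10.0) for level in levels_db))


if __name__ == "__main__":
    print(f"Two 50 dB fans: {combined_level_db([50.0, 50.0]):.1f} dB")  # 53.0
    print(f"Forty 50 dB fans: {combined_level_db([50.0] * 40):.1f} dB")  # 66.0
```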

Practical impacts: Supports ESG compliance and, by extending hardware lifespans, reduces e-waste.

| Impact Area | Air System | Liquid System |
|---|---|---|
| Noise Level | 50-70dB | <30dB |
| Carbon Reduction | Baseline | 20-40% |
| Auxiliary Power | High | Minimal |

Advice: Use biodegradable coolants; integrate with renewable energy for net-zero operations.

Thermal limits in air cooling force clock speed reductions, slowing epoch times in distributed training.

Liquid-cooled GPU systems accelerate processes by sustaining maximum clock speeds, cutting training times by 20-50% for models like GPT-4 equivalents and enabling real-time inference in edge AI clusters.

Consistent cooling unlocks full potential.

Examples: GPU servers halving inference latency; setups for AI research with overclocking support.

Theoretical basis: Lower junction temperatures allow higher voltages and clocks without instability. Trade-offs: Higher power spikes in exchange for throughput gains.
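The power-spike trade-off follows from the standard approximation for CMOS dynamic power, P ≈ C·V²·f: clock gains usually need extra voltage, and voltage enters squared. The sketch below assumes fixed switched capacitance and a hypothetical +5% voltage for +10% clock; it ignores leakage power, which itself grows with temperature.

```python
def relative_dynamic_power(voltage_scale: float, frequency_scale: float) -> float:
    """Ratio P_new / P_old for CMOS dynamic power, P ~ C * V^2 * f, at fixed capacitance."""
    return voltage_scale ** 2 * frequency_scale


if __name__ == "__main__":
    # Hypothetical overclock: +10% clock that needs +5% core voltage.
    ratio = relative_dynamic_power(1.05, 1.10)
    print(f"+10% clock at +5% voltage -> {ratio - 1:.0%} more dynamic power")  # 21%
```

Under these assumptions a roughly 10% throughput gain costs about 21% more dynamic power, all of which the cooling loop must remove to keep junction temperatures low.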

Practical impacts: Can shorten drug-discovery AI workloads by days.

| Speed Metric | Air-Cooled | Liquid-Cooled |
|---|---|---|
| Training Epoch Time | 10 hours | 5-8 hours |
| Inference Latency | 200ms | 100-150ms |
| Overclock Potential | Limited | +10-20% |

Advice: Pair with NVLink for multi-GPU; profile workloads to fine-tune cooling curves.
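A cooling curve maps a measured temperature to a pump (or fan) setting, and profiling a workload is largely a matter of tuning its breakpoints. The sketch below interpolates pump duty linearly between hypothetical curve points and clamps outside them; real controllers also add hysteresis and rate limits so pumps do not hunt as temperatures fluctuate.

```python
from bisect import bisect_right

# Hypothetical curve: (GPU temperature in degC, pump duty in %), sorted by temperature.
DEFAULT_CURVE = ((30.0, 30.0), (45.0, 50.0), (55.0, 80.0), (65.0, 100.0))


def pump_duty(temp_c: float,
              curve: tuple[tuple[float, float], ...] = DEFAULT_CURVE) -> float:
    """Linearly interpolate pump duty from a temperature curve, clamped at both ends."""
    temps = [t for t, _ in curve]
    if temp_c <= temps[0]:
        return curve[0][1]
    if temp_c >= temps[-1]:
        return curve[-1][1]
    i = bisect_right(temps, temp_c)  # index of the first point strictly above temp_c
    (t0, d0), (t1, d1) = curve[i - 1], curve[i]
    return d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0)


if __name__ == "__main__":
    for t in (25.0, 37.5, 50.0, 60.0, 70.0):
        print(f"{t:.1f} degC -> {pump_duty(t):.0f}% pump duty")
```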

Liquid-cooled GPU systems are indispensable for overcoming AI's thermal hurdles, delivering density, efficiency, and speed that propel training and inference forward. With over 15 years as a one-stop heat solutions provider, KINGKA offers customized liquid cold plates—like vacuum-brazed and FSW designs—for GPU clusters, backed by thermal simulations and precision CNC manufacturing. Contact sales2@kingkatech.com to engineer reliable cooling that powers your AI ambitions.